April 2, 2018

Augmented reality revolutionizes surgery and data visualization for VCU researchers

The practical uses for augmented reality, which superimposes digital information onto real-world surroundings, seem endless. Technologists have envisioned futuristic applications such as immersive gaming headsets and glasses that let wearers see turn-by-turn navigation in real time. Recently, scientists have focused on harnessing the technology for intellectual pursuits.

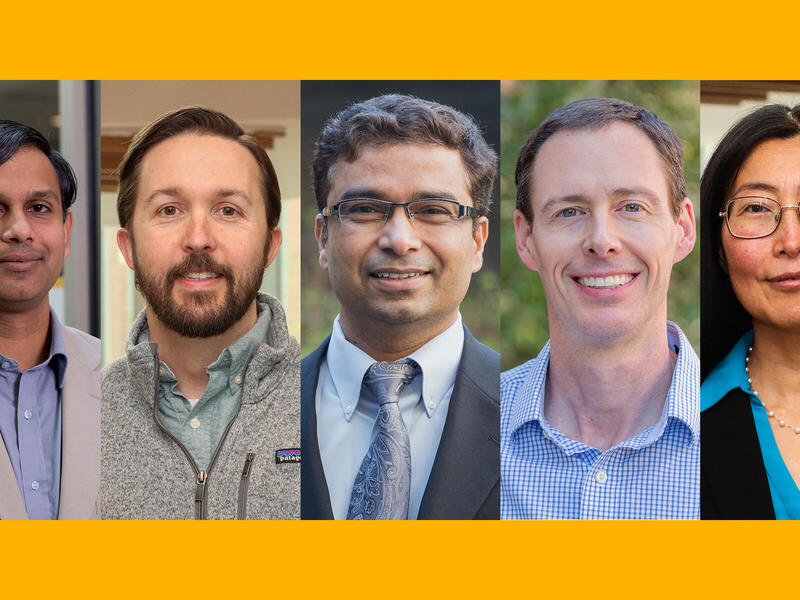

Virginia Commonwealth University researchers are leading the use of AR for medical and research purposes. An interdisciplinary team of faculty and students led by Dayanjan “Shanaka” Wijesinghe, Ph.D., assistant professor in the Department of Pharmacotherapy and Outcomes Science in the School of Pharmacy, is developing augmented reality platforms that could improve surgical approaches, refine personalized medicine and serve as a research tool.

The MedAR program, which is optimized for the Microsoft HoloLens AR platform, renders 3D models of CT and MRI scans and allows users to interact with them digitally. In February, VCU Medical Center surgeons used the application to prepare for two complex cardiac surgeries. Another version of the program is frequently employed to create 3D models of complex biochemical networks for scientific research.

“Our technology has the capability to democratize medicine across the globe,” Wijesinghe said. “The 3D surgical and biochemical network models can be shared worldwide for collaborative planning of complex surgeries and research.”

The operating room

In many respects, the AR application surpasses detailed 2D medical imaging in its ability to offer surgeons realistic presentations of anatomy, said Daniel Tang, M.D., the Richard R. Lower Professor in cardiovascular surgery in the VCU School of Medicine.

Tang is the surgical director of heart transplantation and mechanical circulatory support. He and his team recently donned headsets to prepare for two surgeries, one to mend a central portion of the heart and the other to repair leaks around two artificial valves.

The surgeons used 3D reconstructions of CT scans generated by MedAR to view areas of concern as they would appear on the operating table. Programmed verbal commands allowed the surgeons to rotate the models, move them and make some parts transparent to reveal hidden anatomy.

“It really gives you a sense of where structures lie in relation to other structures while planning operations,” Tang said. “It’s particularly helpful for trainees who are still learning to translate the preoperative screen imaging to the live intraoperative findings.”

Tang expects the technology to improve alongside that of medical imaging and AR hardware.

“Augmented reality represents a leap forward,” Tang said. “When physicians went from plain film X-rays to digitized CT scans, we were provided with more detailed images.”

The majority of this information is still displayed in 2D slices, and 3D reconstructions of the images require further development. However, the VCU team’s interactive 3D models present an intuitive imaging platform that surgeons can use to plan operations and to educate patients about their disease process.

Wijesinghe envisions more ambitious surgical applications for the technology. His lab is working to develop an experience that users in the operating room and remote users can share in real time during surgery, with the option of video and voice recording. Eventually, he aims to expand the software to overlay 1-to-1 scale images on the operative field to provide supplemental information in real time.

“Being able to overlay the 3D virtual reconstruction on the patient is akin to providing something like X-ray vision to the surgeons,” Wijesinghe said. “They will be able to see the patient, but also the structure underneath that they need to reach during the operation.”

Wijesinghe and Tang also collaborated with Vig Kasirajan, M.D., the Stuart McGuire Professor and VCU Department of Surgery chair; Alex Valadka, M.D., professor and VCU Department of Neurosurgery chair; and the VCU da Vinci Center to perfect the technology.

Rendering biochemical networks

The research initiative stemmed from the need to understand biochemical pathways and interactions. This helps scientists explain and predict cellular functions that impact biological mechanisms such as disease progression and metabolism.

Biochemical networks, which are graphs scientists use to visualize biochemical interactions, provide the key to understanding biochemical pathways.

Data points called nodes represent molecules such as enzymes or metabolites. Lines drawn between the nodes define how the molecules interact.
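
As a rough illustration of that node-and-line structure, a biochemical network can be sketched as a small graph in Python. This is a minimal sketch, not the VCU team's software: the `BiochemicalNetwork` class, its method names and the interaction labels are hypothetical, and the three molecules were chosen only as a familiar textbook example.

```python
from collections import defaultdict


class BiochemicalNetwork:
    """Toy graph: nodes are molecules, edges describe how they interact.

    Hypothetical structure for illustration only; it is not taken from MedAR.
    """

    def __init__(self):
        self.nodes = {}                 # molecule name -> kind ("enzyme", "metabolite")
        self.edges = defaultdict(dict)  # source -> {target: interaction label}

    def add_molecule(self, name, kind):
        self.nodes[name] = kind

    def add_interaction(self, source, target, interaction):
        # Both ends of a line must already exist as nodes.
        for name in (source, target):
            if name not in self.nodes:
                raise KeyError(f"unknown molecule: {name}")
        self.edges[source][target] = interaction

    def neighbors(self, name):
        # Molecules that `name` acts on directly, in alphabetical order.
        return sorted(self.edges.get(name, {}))


network = BiochemicalNetwork()
network.add_molecule("hexokinase", "enzyme")
network.add_molecule("glucose", "metabolite")
network.add_molecule("glucose-6-phosphate", "metabolite")
network.add_interaction("hexokinase", "glucose", "acts on")
network.add_interaction("glucose", "glucose-6-phosphate", "phosphorylated to")

print(network.neighbors("glucose"))
```

A complete network would hold thousands of such nodes and lines, which is where a flat screen starts to run out of room.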

Biochemical networks allow scientists to see the bigger picture, but they take up an enormous amount of space when displayed in 2D, which is where AR’s 3D capabilities become useful.

“The issue we were running into is that complete biochemical networks are extremely complex, so no matter how much they are magnified, we cannot even display the data on multiple screens,” Wijesinghe said. “We needed a different technology and it made sense to generate immersive biochemical networks that are not limited to screen space.”

The system has some similarities to existing technologies, Wijesinghe said, but costs much less and is much more portable.

Wijesinghe’s next step is to apply the AR technology’s biochemical network visualization capabilities to personalized medicine. The goal would be to visualize the effect of drug interactions on biological mechanisms within an individual patient’s profile.

For example, a scientist could use the technology to visualize a drug’s ability to improve an individual’s metabolism. Biological indices would be obtained from the patient to create the biochemical network, and drug molecules and metabolites would be represented by nodes. Scientists would use various visual aids, such as affected nodes going dark, to predict drug interactions.
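
The "nodes going dark" idea can be sketched in a few lines of Python. The article does not say how affected nodes would be determined, so this sketch assumes the simplest possible rule: a node is affected if it can be reached by following lines outward from the drug. The function names, the `toy_network` data and the "dark"/"lit" labels are all hypothetical.

```python
from collections import deque


def affected_nodes(network, targets):
    """Return every node reachable from the starting nodes (breadth-first search).

    Assumption for illustration: an effect spreads along every outgoing line.
    """
    seen = set(targets)
    queue = deque(targets)
    while queue:
        node = queue.popleft()
        for downstream in network.get(node, ()):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    return seen


def display_states(network, targets):
    """Map each node in the network to "dark" (affected) or "lit" (unaffected)."""
    all_nodes = set(network) | {n for outs in network.values() for n in outs}
    dark = affected_nodes(network, targets)
    return {node: ("dark" if node in dark else "lit") for node in sorted(all_nodes)}


# Made-up network: each key lists the nodes it acts on directly.
toy_network = {
    "drug_X": ["enzyme_A"],
    "enzyme_A": ["metabolite_B"],
    "metabolite_B": ["metabolite_C"],
    "enzyme_D": ["metabolite_E"],
}

states = display_states(toy_network, ["drug_X"])
for node, state in states.items():
    print(f"{node}: {state}")
```

In this toy case everything downstream of `drug_X` goes dark, while `enzyme_D` and `metabolite_E` stay lit because no line connects them to the drug. A real model would need patient-specific data and a far richer rule than simple reachability.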

“We solved the problem of visualizing networks,” Wijesinghe said. “Now, what we are working on is visualizing how specific drugs can impact an entire network.”
