June 20, 2024

What will it take for artificial intelligence to be safe and secure? VCU expert considers election integrity and other threats that lie ahead.

Technology in elections became a hot-button issue in the 2020 presidential race, and with the explosion of artificial intelligence in recent years, it again will permeate the political landscape in this year’s contest.

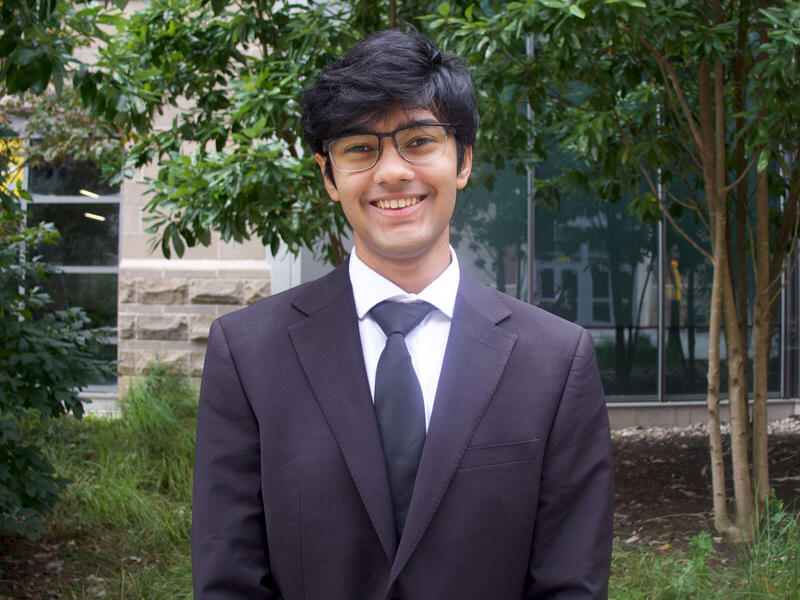

For Milos Manic, Ph.D., director of Virginia Commonwealth University’s Cybersecurity Center, the impact of AI on undecided voters is of particular concern.

“Will the undecided portion of the electorate be targeted in a certain way? Will they be fed carefully chosen information and specific misinterpreted information?” he asked.

Manic, a professor in the Department of Computer Science at VCU’s College of Engineering and an inaugural fellow of the Commonwealth Cyber Initiative, has completed more than 40 research grants in the area of data mining and machine learning applied to cybersecurity, infrastructure protection, energy security and resilient intelligent control. In April, he received the FBI Director’s Community Leadership Award for advancing AI to protect the nation’s infrastructure from cyberattacks.

VCU News asked Manic for insights into the intersection of AI, elections and society overall.

What are key vulnerabilities we face in terms of election integrity?

Social media can very quickly and easily give an illusion of group consensus – people thinking the same way – while in as many as 90% of those instances that may not be the case. Human psyche, human psychology, human vulnerabilities – this is where the battles are being waged now.

Psychological warfare has existed for hundreds of years. In the world wars, it might have been a person who was given a pamphlet to read to a whole village. It might have been pamphlets that were thrown out of planes. Now it’s psychological warfare through technology. Humans have so-called emotional triggers, something that they react to. Humans have cognitive biases.

Are there ways we could address such shortcomings quickly even if imperfectly?

We could look at four areas. The first is policy. The second is safe and secure AI. The third is user awareness. And the fourth is looking across borders and forming alliances.

In policy, the whole world came late into the AI game. Now a number of organizations – governmental, nongovernmental and nonprofit – are trying to deal with policy, governance, regulatory frameworks and so on.

With current resources, algorithms or knowledge, there’s very little we cannot do given enough time. So the question is, are we developing this in the right way for the right reasons?

Six or seven years ago, we looked at different algorithms to solve some cybersecurity problems, and we showed that for almost any kind of problem, we can find at least three algorithms that will solve it correctly. The question is, will that solution hold when you put it in production? Are we looking at transformations that will matter in the future as they matter today?

What about those other three areas?

For having a safe and secure AI, a key question is: Can we focus on ethical, unbiased, trustworthy developers?

The next step is the users – the public awareness and understanding. What are we posting? What are we sharing, or what are we making available to others? And how do we know whom we are making it available to? Facebook users may say, “I know who I’m sharing with.” No, you don’t. You think you do. But how do you know that your friend was not compromised and became someone else?

And then the next step is going beyond borders. In the cyberworld, there’s something called the Five Eyes [Australia, Canada, New Zealand, the United Kingdom and the United States] forming an intelligence alliance. Enemies are now based off of computers or networks that might be continents away. So if there’s no alliance to communicate across, it will be very easy for nefarious actors to invade.

Are you doing work that intersects strongly with election integrity or political disinformation?

The key here becomes real-time AI fraud detection. AI is very powerful when you have enough computing resources. There’s almost nothing you cannot do if you have enough time and data. But the question is, can you do it in real time?

VCU has been the lead of the statewide initiative CCAC, the Commonwealth Center for Advanced Computing. The centerpiece of our center is the IBM z16 machine. It is one of those supercomputers based on on-chip AI, specifically designed for real-time AI for fraud detection. There are so many capable machines these days in industry and academia, and so many algorithms are so powerful. But the question is, can you do it in real time? VCU is the lead and the steward of this state resource.

As AI grows in its ability to create comprehensive texts and realistic images, how would you assess its potential to threaten (or protect) the political or electoral process?

Again, the key is, can you use AI in real time to achieve something? Because all of these attacks or fraud, and the same goes for disinformation, happen in real time. If you allow disinformation enough time, coming back with a response a week later almost doesn’t matter, because that opinion has already influenced people. It’s almost the same as not reacting at all. It’s too late to change people’s minds.

One thing that worries me is these machines could become smart enough to tailor their humanlike responses to a user. So they will have a different way of presenting the same information to me and a different way of presenting it to my daughter, for example, who is way younger, because her perception of what’s real will be very different than my perception. So the ability of a machine to learn and adjust in real time is what’s scary.

We need to focus on human psyches, human vulnerabilities. What are we susceptible to? It’s not engineering and computer science alone anymore. It’s human factors, psych experts and so on.

Even beyond elections, what keeps you up at night related to AI at the moment – and what helps you sleep more easily?

It’s a moving target. The problem is that the moment the nefarious actors figure out what we can detect, they will work [to make deep fakes] even better.

Some say we need to be developing better tools. Then some others say, well, who guards the guard? Who will vouch that the vetting tool has not been compromised? But this has been the case in the cyberworld forever. It’s cat and mouse. So I have zero doubt that this will continue. But I also have zero doubt that the good actors will continue not sleeping at night.

Real-time detection is going to be the key for years to come – developing faster and faster solutions that will, within nanoseconds, be able to detect deep fakes and provide a vetting process that will be tied to whatever tools we are using. If we are using a browser (Chrome, Firefox, Safari), we will have tools that will flag it in real time in some way.

Humans are vulnerable, and this is what keeps me awake. How do we keep up with these changes and, in an unbiased way, make a decision? We are taking a lot of practices from the cyberworld into AI.

I’m more worried about humans making a decision at the last moment because something happened. I think this is where the biggest fight is today. They will change their opinion based on something that they’ve seen, heard or been told and so on. So human vulnerability, human psyche – that’s where the cyberwars will continue to be. But I’m worried less about the technical side. It’s with humans that it all starts and ends.
